OK, this is an unstructured post, but I'm going to leave it here for when I forget what I did.

I have a site with an Ookla Speedtest up/down rating of around 2 Mbps.

On that site is an SBS 2011 box, which includes an Exchange 2010 server with about 11 years of email on it.

The mailbox export is done using PowerShell:

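```powershell
# Run from the Exchange Management Shell. The account needs the
# "Mailbox Import Export" role, and FilePath must be a UNC share that the
# Exchange Trusted Subsystem group can read and write.
$export_date = '201705302347'
$export_location = "\\server\share\"
$export_names = @("usermailboxaliasname", "anothermailboxalias")

ForEach ($name in $export_names) {
    # -AcceptLargeDataLoss is required whenever -BadItemLimit is above 50
    New-MailboxExportRequest -Mailbox $name `
        -FilePath ($export_location + $name + $export_date + ".pst") `
        -BadItemLimit 1000 -AcceptLargeDataLoss

    # definitely need a high bad item limit and AcceptLargeDataLoss,
    # because mailboxes that are over 10 years old
    # have a lot of cruft and Asian and other-language character sets
    # that the export chokes on
}
```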

The mailbox export takes an hour or so for 28 GB.
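For a sense of scale, here's a rough size-over-bandwidth estimate of moving that 28 GB over the site's ~2 Mbps link. It's a best case (decimal units, no protocol overhead, retries or contention), and `transfer_hours` is just a name made up for this sketch.

```shell
#!/usr/bin/env bash
# Back-of-the-envelope transfer time: GB * 8,000,000 kbit / link speed in kbps.
# Decimal units; ignores protocol overhead, retries and line contention,
# so treat the result as a best case.
set -euo pipefail

# transfer_hours SIZE_GB LINK_KBPS -> whole hours, rounded down
transfer_hours() {
  local size_gb=$1 kbps=$2
  echo $(( size_gb * 8000000 / kbps / 3600 ))
}

echo "28 GB at 2 Mbps: about $(transfer_hours 28 2000) hours"   # about 31 hours
```

That's more than a day of saturating the line before anything goes wrong.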

I can't get the PST offsite as a monolith, so I md5sum it, cut it into chunks with 7-Zip, then download the chunks and reassemble them.
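Here's a minimal sketch of the hash-then-chunk step, assuming a Bash shell with GNU coreutils. I've swapped in `split` for 7-Zip so it runs anywhere; a 7-Zip equivalent is noted in a comment. The function name, file names and chunk size are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: GNU coreutils `split` stands in for 7-Zip volumes here.
# A 7-Zip equivalent would be something like:  7z a -v1g export.7z export.pst
set -euo pipefail

# split_with_hash FILE CHUNK_BYTES
# Records FILE's MD5 in FILE.md5, then cuts FILE into FILE.001, FILE.002, ...
# (3-digit, zero-padded suffixes so a shell glob lists them in order).
split_with_hash() {
  local file=$1 size=$2
  md5sum "$file" | cut -d' ' -f1 > "$file.md5"
  split -b "$size" -a 3 --numeric-suffixes=1 "$file" "$file."
}

# Demo on a small random file standing in for the 28 GB PST.
cd "$(mktemp -d)"
head -c 250000 /dev/urandom > export.pst
split_with_hash export.pst 100000
ls export.pst.*   # lists .001, .002, .003 and .md5
```

Keep the `.md5` file with the chunks; it's what you check the rebuilt PST against at the other end.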

The problem is that once it's downloaded to a site on Australian ADSL, uploading it back into the cloud would take around a month.

So I tried to send the PST straight to Office 365 with AzCopy, but the program just times out, even if I use /NC:2 to limit it to two concurrent operations.

```bat
:: split across lines for readability (^ continues the command on the next line)
"C:\Program Files (x86)\Microsoft SDKs\Azure\AzCopy\AzCopy.exe" ^
  /Source:"Z:\Downloads\PST Export Folder\transfer" ^
  /Dest:"https://kajdfaajfaaf89f89.blob.core.windows.net/ingestiondata?sv=2012-02-12&se=2017-06-12T02%3A09%3A00Z&sr=c&si=IngestionSasForAzCopy201705310208574840&sig=ez98908339083s890EcqJok9U%3D" ^
  /V:"Z:\Downloads\PST Export Folder\azcopy.log"
```

So that is a fail.

So the next step is to get the chunks up into the cloud, reassemble them there, and then use the cloud provider's fat pipes to retry the AzCopy upload to the Office 365 Azure storage location. I'm using AWS Tools for PowerShell for this.

I found that you need to do a bit of setup, but it's pretty straightforward if you follow their excellent documentation. I also needed to upgrade to Windows Management Framework 5.1 to get the latest version of PowerShell. Some notes from the initial PowerShell config and use:

```powershell
# Make loading AWSPowerShell permanent so you don't have to keep adding it:
# put the Import-Module command below into the file opened by notepad $profile.
# I had to create some folders in the directory path before $profile could be
# created; this creates the file and any missing folders if it doesn't exist yet:
if (!(Test-Path $profile)) { New-Item -ItemType File -Path $profile -Force }

Import-Module AWSPowerShell

# You need to set your credentials and store them under a profile name
Set-AWSCredentials -AccessKey 'AKIAIOSFODNN7EXAMPLE' -SecretKey 'wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY' -StoreAs 'MyProfileName'

# Then, each time you run PowerShell, you need to set that profile as the default,
# otherwise Get-S3Bucket errors out
Set-AWSCredentials -ProfileName 'MyProfileName'

Get-S3Bucket

# Once you can successfully list your buckets, you can upload your chunks
Write-S3Object -Folder .\ -SearchPattern '*.7z.*' -BucketName my-fantastic-bucket
```

Once the chunks are in an Amazon S3 bucket, it should be an easy matter to recombine them into the huge PST and then retry AzCopy.

Within the Office 365 admin console, getting to the Mailbox Import Wizard takes some doing. My default Office 365 admin account had to be given the "Mailbox Import Export" role, which was buried in what felt like desktop-style admin GUI dialogs converted into web screens. It was a bit of a clickety-clickety fest to find the areas I needed, so I had to follow the documentation.

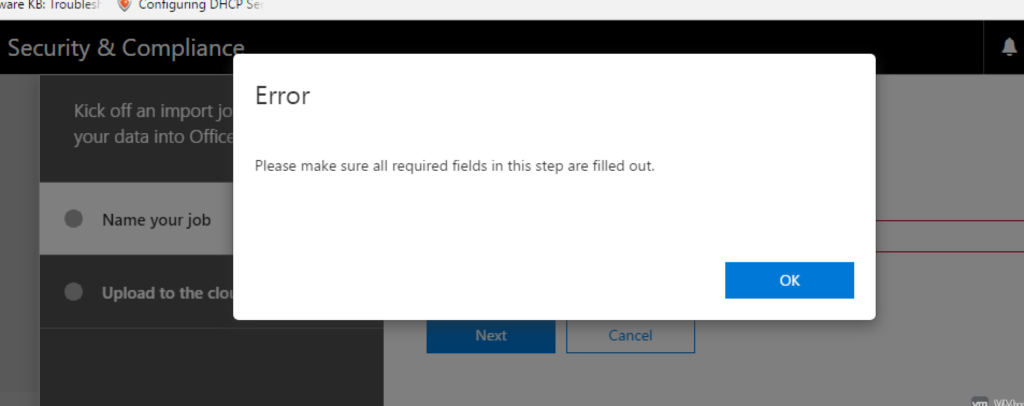

From the default login, go to "Security & Compliance" ==> Data Governance ==> Import, then click the "New Import Job" button and enter an import job name. I was entering JMITSMailboxImport, and sadly the M$ error tooltips weren't displaying, so I never saw the warning that the name must be all lowercase. After multiple attempts, some googling and reading the manual, I worked out that jmits-mailbox-import would work.
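To avoid another round of silent rejections, a job name can be pre-checked before typing it into the wizard. The allowed character set below (lowercase letters, digits, hyphens, underscores) is an assumption based on Microsoft's import documentation; this post only confirms the lowercase rule. `valid_job_name` is a made-up helper.

```shell
#!/usr/bin/env bash
# Sketch: pre-check an Office 365 PST import job name.
# ASSUMPTION: allowed characters are lowercase letters, digits, '-' and '_'.
valid_job_name() {
  local re='^[[:lower:][:digit:]_-]+$'
  [[ $1 =~ $re ]]
}

for name in JMITSMailboxImport jmits-mailbox-import; do
  if valid_job_name "$name"; then echo "$name: OK"; else echo "$name: rejected"; fi
done
```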

From there I got the Azure upload URL, but I'm still stuck uploading the chunks to a faster network, which may then let azcopy work. Incidentally, there now seems to be a Linux version of AzCopy ==> https://azure.microsoft.com/en-au/blog/announcing-azcopy-on-linux-preview/ I hope it works, and I hope I can successfully get the PST into the Import console.

So at 20 to 1 in the morning I'm now going to bed to dream of Office 365 transfers.

So the Write-S3Object PowerShell stuff works really well. I did find, however, that once you start the command, it keeps uploading even if you hit CTRL-C, which I found odd.

So 21 chunks arrive in S3 on Amazon.

So: launch an Amazon Linux t2.medium EC2 instance (4 GB RAM) with a 120 GB EBS volume, and do all the usual steps to make the EBS volume writable (`mkfs -t ext4 /dev/xvdb`, etc.). Give the instance an IAM role with S3 read access.

In hindsight, I probably should have chosen an instance with more CPU and RAM to speed up the 7z extraction.
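For the EBS volume setup above, here's a sketch of the usual commands on Amazon Linux. The device name, the `/data` mount point and the `DRY_RUN` switch are assumptions of this sketch; check `lsblk` for the real device. It only prints the commands unless run with `DRY_RUN=0`, and it only formats a device that `file -s` reports as plain `data` (no filesystem), so an existing volume isn't wiped.

```shell
#!/usr/bin/env bash
# Sketch: make a fresh EBS volume usable on Amazon Linux.
# DEVICE, MOUNT_POINT and DRY_RUN are assumptions of this sketch; check `lsblk`
# for the real device name. Prints the commands unless run with DRY_RUN=0.
set -euo pipefail

DEVICE=${DEVICE:-/dev/xvdb}
MOUNT_POINT=${MOUNT_POINT:-/data}     # hypothetical mount point
DRY_RUN=${DRY_RUN:-1}

# run CMD...: echo the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = 1 ]; then echo "+ $*"; else "$@"; fi
}

# `file -s` reports plain "data" for a device with no filesystem; only format
# in that case so an existing volume is never wiped (check skipped in dry run).
if [ "$DRY_RUN" = 1 ] || sudo file -s "$DEVICE" | grep -q ': data$'; then
  run sudo mkfs -t ext4 "$DEVICE"
fi
run sudo mkdir -p "$MOUNT_POINT"
run sudo mount "$DEVICE" "$MOUNT_POINT"
# Hand the mount point to the current user so it's writable without sudo.
run sudo chown "$(id -un):$(id -gn)" "$MOUNT_POINT"
```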

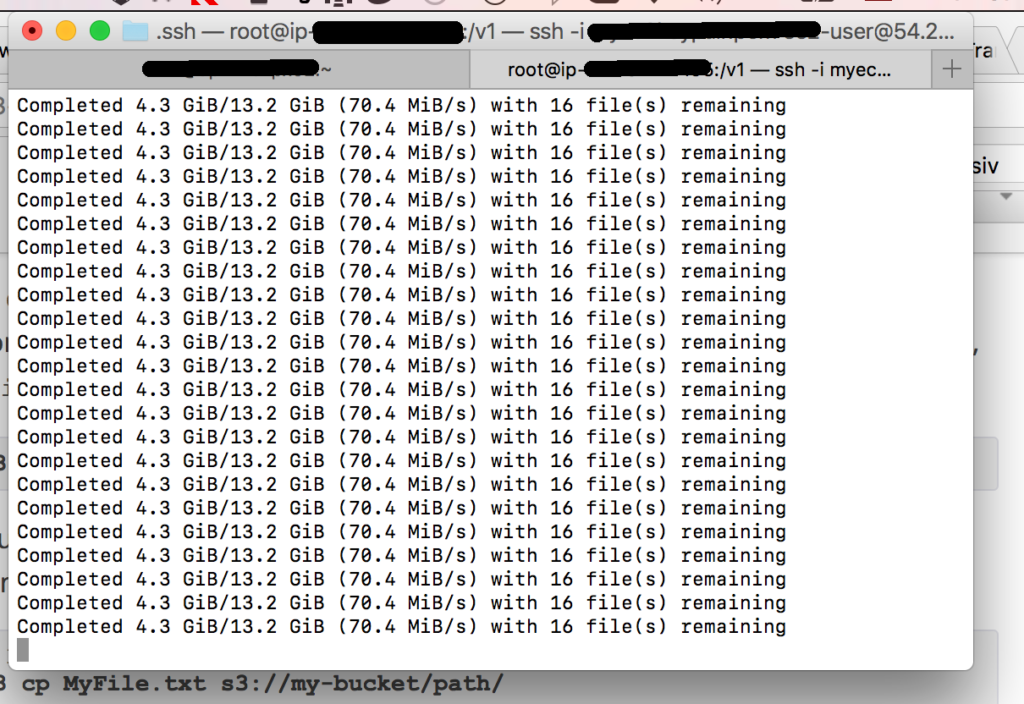

Pull the chunks down using `aws s3 cp s3://bucket-name . --recursive`.
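Before spending time recombining, it's worth confirming none of the chunks went missing. This sketch assumes 7-Zip's default 3-digit, 1-based volume naming (`NAME.7z.001`, `.002`, ...); `check_chunks` is a hypothetical helper. The post expects 21 chunks, so passing 21 as the expected count also catches volumes missing off the end.

```shell
#!/usr/bin/env bash
# Sketch: check that numbered volumes PREFIX.001, PREFIX.002, ... have no gaps
# before recombining. Assumes 7-Zip's 3-digit, 1-based volume suffixes.

# check_chunks PREFIX [EXPECTED_COUNT]
# Prints any missing volume names; returns 1 if something is missing.
check_chunks() {
  local prefix=$1 expected=${2:-0} last=0 missing=0 n f
  # Find the highest volume number actually present.
  for f in "$prefix".[0-9][0-9][0-9]; do
    [ -e "$f" ] || continue            # glob matched nothing
    n=$((10#${f##*.}))                 # "008" -> 8 (base 10, not octal)
    if [ "$n" -gt "$last" ]; then last=$n; fi
  done
  # An expected count also catches volumes missing off the end.
  if [ "$expected" -gt "$last" ]; then last=$expected; fi
  if [ "$last" -eq 0 ]; then echo "no chunks found for $prefix"; return 1; fi
  for ((n = 1; n <= last; n++)); do
    f=$(printf '%s.%03d' "$prefix" "$n")
    if [ ! -e "$f" ]; then echo "missing: $f"; missing=1; fi
  done
  if [ "$missing" -eq 0 ]; then echo "all $last chunks present"; fi
  return "$missing"
}

# Demo in a scratch directory with volume 003 deliberately absent.
cd "$(mktemp -d)"
touch archive.7z.001 archive.7z.002 archive.7z.004
check_chunks archive.7z || echo "re-download the missing chunks before recombining"
```

On the real download you'd run something like `check_chunks archive.7z 21` in the directory the chunks were copied into.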

Then recombine them into a 7-Zip archive, and enable EPEL as follows so that I can install p7zip (`yum install p7zip`) to extract the resulting 7z archive.

https://gist.github.com/marcesher/7168642#file-gistfile1-txt
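Here's a sketch of the recombine-and-check step, assuming the 7-Zip volumes follow the `NAME.7z.001`, `.002`, ... pattern. The function and file names are hypothetical, and since 7-Zip may not be installed where this runs, the demo makes its own chunks with coreutils `split`.

```shell
#!/usr/bin/env bash
# Sketch: rebuild a file from NAME.001, NAME.002, ... and check it against an
# MD5 recorded before splitting. Names are hypothetical; coreutils `split`
# creates the demo chunks in place of 7-Zip volumes.
set -euo pipefail

# recombine PREFIX OUTPUT: concatenate PREFIX.001, PREFIX.002, ... in order.
# Zero-padded suffixes mean the glob already expands in the right order.
recombine() {
  cat "$1".[0-9][0-9][0-9] > "$2"
}

# verify_md5 FILE EXPECTED_HEX: exit status 0 only if the digests match.
verify_md5() {
  [ "$(md5sum "$1" | cut -d' ' -f1)" = "$2" ]
}

# Demo: make chunks from a random stand-in file, then put them back together.
cd "$(mktemp -d)"
head -c 300000 /dev/urandom > original.pst
expected=$(md5sum original.pst | cut -d' ' -f1)
split -b 128000 -a 3 --numeric-suffixes=1 original.pst chunks.
recombine chunks rebuilt.pst
if verify_md5 rebuilt.pst "$expected"; then echo "MD5 matches"; else echo "MD5 MISMATCH"; fi
```

On the real files you'd concatenate the volumes into `archive.7z`, extract it with `7za x archive.7z` from the p7zip package, and run the MD5 check on the extracted PST, since the PST is what was hashed on the Windows side. Pointing `7za x` at `archive.7z.001` should also work without concatenating first.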

To get the Linux version of azcopy working, you need to install .NET Core:

https://www.microsoft.com/net/core#linuxcentos

And then install azcopy:

```bash
wget -O azcopy.tar.gz https://aka.ms/downloadazcopyprlinux
tar -xf azcopy.tar.gz
sudo ./install.sh
azcopy   # should now run from anywhere
```

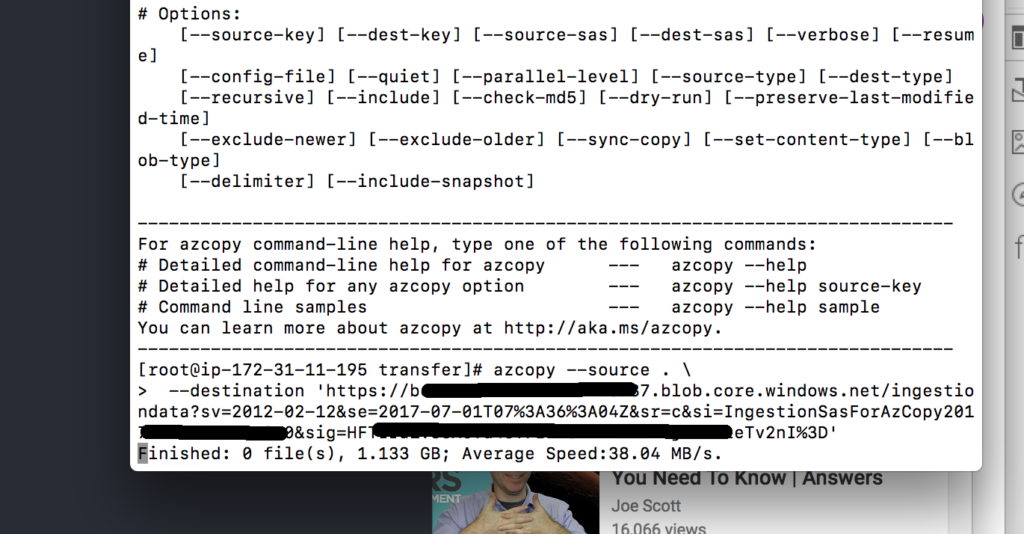

Finally, you can do the upload: make a directory, move the PST (or PSTs) into it, cd into it, and run azcopy on Linux:

```bash
azcopy --source . \
  --destination 'https://bbcccffff9894183836843897cc.blob.core.windows.net/ingestiondata?sv=2012-02-12&se=2017-07-01T07%3A36%3A04Z&sr=c&si=IngestionSasForAzCopy20170531020844440&sig=adfadfaa%3D'
```
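The `se=` parameter in these SAS URLs is the expiry time, and the two URLs in this post expire on different dates, so it's worth checking before kicking off a multi-hour upload. Here's a sketch that pulls `se=` out of the URL and compares it with the current time. It assumes GNU `date`, and the function names are made up.

```shell
#!/usr/bin/env bash
# Sketch: read the expiry (se=) from an Azure SAS URL and check it before
# starting a long upload. Function names are made up; assumes GNU date.
set -euo pipefail

# sas_expiry URL: print the se= value with %3A decoded back to ':'.
sas_expiry() {
  local query=${1#*\?} pair
  local -a pairs
  IFS='&' read -ra pairs <<< "$query"
  for pair in "${pairs[@]}"; do
    case $pair in
      se=*) printf '%s\n' "${pair#se=}" | sed 's/%3[Aa]/:/g'; return 0 ;;
    esac
  done
  return 1
}

# sas_seconds_left URL [NOW_EPOCH]: seconds until expiry (<= 0 once expired).
sas_seconds_left() {
  local expiry now
  expiry=$(sas_expiry "$1") || return 1
  now=${2:-$(date -u +%s)}
  echo $(( $(date -u -d "$expiry" +%s) - now ))
}

url='https://bbcccffff9894183836843897cc.blob.core.windows.net/ingestiondata?sv=2012-02-12&se=2017-07-01T07%3A36%3A04Z&sr=c&si=IngestionSasForAzCopy20170531020844440&sig=adfadfaa%3D'
left=$(sas_seconds_left "$url")
if [ "$left" -le 0 ]; then
  echo "SAS URL expired at $(sas_expiry "$url"); get a fresh one before uploading"
else
  echo "SAS URL valid for about $(( left / 3600 )) more hours"
fi
```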

Using Amazon's infrastructure allows the azcopy transfer to complete without timing out.
