BlobFuse2 is a Microsoft-maintained utility that mounts a remote Azure Storage Account Blob container (or a folder within one) into the Linux file system so you can work with it as if it were local.

You need two directories, one for the cache and one for the mount point. You could put them anywhere, such as in your home directory, but I created a 120 GB disk and mounted it as /u1.

| Cache Directory | Azure Storage Account Container Mount Point |
| --- | --- |
| /u1/blobfuse/cache | /u1/azure |

The cache directory holds local copies of the files you open or copy into the mount point; new or changed files wait there until they are uploaded to Azure.
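A minimal sketch of creating both directories, assuming the /u1 layout in the table and that the account running blobfuse2 should own them. The function name `make_blobfuse_dirs` and its optional owner argument are illustrative, not part of blobfuse2:

```shell
# Hypothetical helper: create the cache and mount-point directories from the table.
# $1 = base directory (default /u1), $2 = owner (default: the current user).
make_blobfuse_dirs() {
  base="${1:-/u1}"
  owner="${2:-$(id -un)}"
  mkdir -p "$base/blobfuse/cache" "$base/azure" || return 1
  # The user that runs blobfuse2 needs write access to the cache and the mount point.
  chown "$owner" "$base/blobfuse" "$base/blobfuse/cache" "$base/azure"
}
```

Run as root with, for example, `make_blobfuse_dirs /u1 ja` if the mount will belong to the user `ja` from the service file.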

Download and install the Microsoft apt repository configuration, then install blobfuse2 (the URL below is for Ubuntu 22.04):

wget https://packages.microsoft.com/config/ubuntu/22.04/packages-microsoft-prod.deb

sudo dpkg -i ./packages-microsoft-prod.deb

sudo apt-get update

sudo apt-get install blobfuse2
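The wget URL above is hard-coded for Ubuntu 22.04. This sketch builds the same kind of URL from /etc/os-release instead. It assumes Microsoft publishes the repository package under the same `config/<ID>/<VERSION_ID>/` path for your release, so check that the URL exists before relying on it. `ms_repo_deb_url` is a hypothetical name:

```shell
# Hypothetical helper: print the packages-microsoft-prod.deb URL for this distro release.
# $1 = path to an os-release file (default /etc/os-release).
ms_repo_deb_url() {
  os_release="${1:-/etc/os-release}"
  # os-release is a shell-compatible KEY=value file; source it in a subshell so
  # its variables (ID, VERSION_ID, ...) don't leak into the calling shell.
  (
    . "$os_release" || exit 1
    printf 'https://packages.microsoft.com/config/%s/%s/packages-microsoft-prod.deb\n' "$ID" "$VERSION_ID"
  )
}
```

Usage: `wget "$(ms_repo_deb_url)"`.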

Edit /etc/fuse.conf and uncomment user_allow_other.

This lets users other than the one who mounted the container access the files. A non-root user also needs it in order to use the allow-other: true setting in blobfuse2.yaml.

# presence of the option.

user_allow_other

# mount_max = n - this option sets the maximum number of mounts.
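The edit can also be scripted. This sketch uncomments `user_allow_other` if it is present but commented out, appends it if it is missing, and leaves the file alone if it is already enabled, so it is safe to run more than once. It assumes GNU sed (for `sed -i`), as on Ubuntu; the function name is hypothetical:

```shell
# Hypothetical helper: make sure user_allow_other is enabled in a fuse.conf file.
# $1 = file to edit (default /etc/fuse.conf); run as root for the real file.
enable_user_allow_other() {
  conf="${1:-/etc/fuse.conf}"
  # Already enabled: nothing to do.
  if grep -Eq '^[[:space:]]*user_allow_other[[:space:]]*$' "$conf"; then
    return 0
  fi
  if grep -Eq '^[[:space:]]*#[[:space:]]*user_allow_other[[:space:]]*$' "$conf"; then
    # Present but commented out: strip the leading '#'.
    sed -i -E 's/^[[:space:]]*#[[:space:]]*(user_allow_other)[[:space:]]*$/\1/' "$conf"
  else
    # Missing entirely: the option must sit on a line by itself.
    printf '%s\n' 'user_allow_other' >> "$conf"
  fi
}
```

Only a line containing nothing but `#user_allow_other` is uncommented, so descriptive comment lines that merely mention the option are left untouched.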

Configure blobfuse2.yaml

# Refer to ./setup/baseConfig.yaml for the full set of config parameters
allow-other: true

logging:
  type: syslog
  level: log_debug

components:
  - libfuse
  - file_cache
  - attr_cache
  - azstorage

libfuse:
  attribute-expiration-sec: 120
  entry-expiration-sec: 120
  negative-entry-expiration-sec: 240

file_cache:
  path: /u1/blobfuse/cache
  # timeout-sec: 120
  max-size-mb: 8192
  cleanup-on-start: true
  allow-non-empty-temp: true

# Only used when "stream" replaces file_cache in the components list above
stream:
  block-size-mb: 8
  blocks-per-file: 3
  cache-size-mb: 1024

attr_cache:
  timeout-sec: 7200

azstorage:
  type: block
  account-name: mystorageaccount
  # account-key: mystoragekey
  endpoint: https://mystorageaccount.blob.core.windows.net
  mode: sas
  container: mycontainer
  sas: "?st=_START_DATE_&se=_EXPIRY_DATE_&sp=racwdl&sv=2021-06-08&sr=c&sig=_SIG_HERE_"
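Because a SAS has an expiry date, the `sas:` line will need replacing from time to time. This sketch swaps in a new token and tightens the file's permissions, since the file holds a credential and limiting it to its owner seems prudent. The function name is hypothetical, and it assumes GNU sed and a single `sas:` key, as in the config above:

```shell
# Hypothetical helper: replace the value of the sas: key in a blobfuse2 config file.
# $1 = config file, $2 = new SAS token (written exactly as given).
set_sas_token() {
  conf="$1"
  sas="$2"
  # Escape characters that are special in a sed replacement: '\', '&' (SAS tokens
  # are full of '&') and '|' (the delimiter used below).
  esc=$(printf '%s' "$sas" | sed -e 's/[\\&|]/\\&/g')
  # Keep the original indentation and quote the token so YAML reads it as a string.
  sed -i -E "s|^([[:space:]]*sas:).*|\1 \"$esc\"|" "$conf" || return 1
  chmod 600 "$conf"
}
```

Usage: `set_sas_token ./blobfuse2.yaml "$NEW_TOKEN"`, then remount so blobfuse2 picks up the new value.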

Manual mount command

blobfuse2 mount /u1/azure --config-file=./blobfuse2.yaml
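To avoid mounting the same directory twice from a script, a wrapper can check /proc/mounts first. A minimal sketch; `is_mounted` and `mount_blob` are hypothetical names, and the check compares the mount-point field literally (paths containing spaces are escaped in /proc/mounts and would not match):

```shell
# Hypothetical helpers around the manual mount command.
# is_mounted DIR: succeed if DIR appears as a mount point in /proc/mounts.
is_mounted() {
  awk -v d="$1" '$2 == d { found = 1 } END { exit !found }' /proc/mounts
}

# mount_blob DIR CONFIG: mount the container at DIR unless something is already mounted there.
mount_blob() {
  mnt="$1"
  cfg="$2"
  if is_mounted "$mnt"; then
    echo "already mounted: $mnt"
    return 0
  fi
  blobfuse2 mount "$mnt" --config-file="$cfg"
}
```

Usage: `mount_blob /u1/azure ./blobfuse2.yaml`.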

Create a SAS token signed with the storage account access key using the Azure CLI

az storage container generate-sas \
  --account-name mystorageaccount \
  --name mycontainer \
  --permissions acdlrw \
  --start 2022-12-06 \
  --expiry 2024-12-07 \
  --account-key "_STORAGE_ACCOUNT_ACCESS_KEY_"

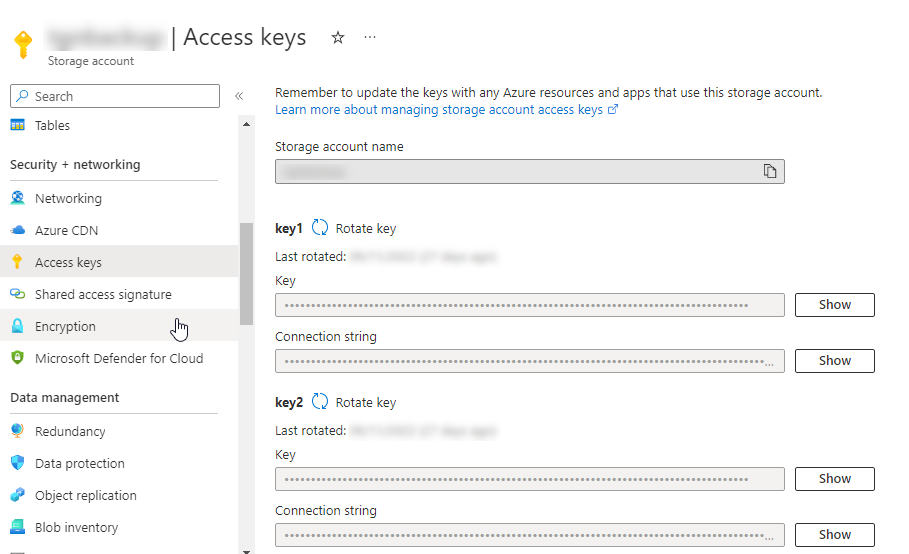

To get the _STORAGE_ACCOUNT_ACCESS_KEY_ from the Azure Portal, open the storage account and go to Security + networking > Access keys.

Click Show and copy the key.
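The token generated above stops working after its `--expiry` date, after which Azure refuses blobfuse2's requests. This sketch reports how many days a token has left by reading the `se=` (signed expiry) field from the query string. It assumes GNU date and decodes only the `%3A` escapes that appear in timestamps with a time part; the function names are hypothetical:

```shell
# Hypothetical helpers: inspect the expiry (se=) field of a SAS token.
# sas_expiry TOKEN: print the se= value, e.g. 2024-12-07 or 2024-12-07T00:00:00Z.
sas_expiry() {
  # Prefix '&' so se= is found whether or not it is the first parameter.
  printf '&%s\n' "$1" | sed -n -E 's/.*[?&]se=([^&]*).*/\1/p' | sed 's/%3[Aa]/:/g'
}

# sas_days_left TOKEN: print whole days until expiry (negative once expired).
sas_days_left() {
  exp=$(sas_expiry "$1")
  [ -n "$exp" ] || return 1
  exp_s=$(date -u -d "$exp" +%s) || return 1
  now_s=$(date -u +%s)
  echo $(( (exp_s - now_s) / 86400 ))
}
```

For example, `sas_days_left '?st=2022-12-06&se=2024-12-07&sp=racwdl&sv=2021-06-08&sr=c&sig=...'` prints a negative number once that token has expired.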

Starting blobfuse2 at boot with systemd

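Edit to taste, then copy the following into /etc/systemd/system/blobfuse2.service, reload systemd, and enable the service so it starts now and at every boot:

sudo systemctl daemon-reload

sudo systemctl enable --now blobfuse2.service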

Contents of the blobfuse2.service file:

I'm not sure I'm doing it right, but I had to add --foreground to get the service to run and stay up.

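[Unit]
Description=A virtual file system adapter for Azure Blob storage.
After=network-online.target
Requires=network-online.target

[Service]
User=ja
Group=ja
# WorkingDirectory is a [Service] option; systemd ignores it under [Unit]
WorkingDirectory=/u1/blobfuse2
Environment=BlobMountingPoint=/u1/azure
Environment=BlobTmp=/u1/blobfuse2/cache
Environment=BlobConfigFile=/u1/blobfuse2/blobfuse2.yaml
Environment=BlobLogLevel=LOG_WARNING
# Not referenced by ExecStart; the equivalent timeouts are set in the libfuse section of blobfuse2.yaml
Environment=attr_timeout=240
Environment=entry_timeout=240
Environment=negative_timeout=120
# --foreground keeps blobfuse2 attached to systemd, so the unit type is simple;
# with Type=forking, systemd waits for the ExecStart process to exit and would time out
Type=simple
ExecStart=/usr/bin/blobfuse2 mount ${BlobMountingPoint} --foreground --tmp-path=${BlobTmp} --config-file=${BlobConfigFile} --log-level=${BlobLogLevel}
ExecStop=/usr/bin/fusermount -u ${BlobMountingPoint}

[Install]
WantedBy=multi-user.target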

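Stopping rsync from copying everything back up to Azure after a restart

Use --ignore-existing. It skips every file that already exists in the target, so files that have changed in the source will not be re-uploaded either.

Be careful with --delete: it deletes everything in the target that is not in the source.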

rsync -av --delete --ignore-existing /u1/backup/ofs/ /u1/azure/
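Since `--delete` can remove files from the container, it may be worth previewing a run first: rsync's `--dry-run` (`-n`) lists what would be copied and deleted without changing anything. This sketch wraps the command above with preview as the default mode; `sync_to_azure` is a hypothetical name:

```shell
# Hypothetical wrapper around the rsync command above.
# sync_to_azure SRC DST [run]: preview by default; pass "run" as the third argument to sync for real.
sync_to_azure() {
  src="$1"
  dst="$2"
  # A trailing slash makes rsync copy the contents of SRC rather than SRC itself.
  case "$src" in
    */) ;;
    *) src="$src/" ;;
  esac
  if [ "${3:-}" = "run" ]; then
    rsync -av --delete --ignore-existing "$src" "$dst"
  else
    # Lines starting with "deleting" show what --delete would remove.
    rsync -av --dry-run --delete --ignore-existing "$src" "$dst"
  fi
}
```

Usage: `sync_to_azure /u1/backup/ofs /u1/azure` to review the changes, then `sync_to_azure /u1/backup/ofs /u1/azure run` once the list looks right.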
